Are you wasting your time with A/B testing? A lot of businesses make A/B testing mistakes that cost them time and money they can’t afford, because they don’t understand what A/B tests are and how to run them correctly.

A/B testing is an amazing way to improve conversions for any business. We’ve seen OptinMonster customers use split testing to easily get more sales-qualified leads, grow their email list, and even boost conversions by 1500%.

BUT if you’re making one of the common split testing mistakes listed below, your split tests could be doing more harm than good. Qubit says that badly done split tests can make businesses invest in unnecessary changes, and can even hurt their profits.

The truth is, there’s a lot more to A/B testing than just setting up a test. If you really want to see the improvements that split testing can bring, you’ll need to run your tests the right way, and avoid the errors that undermine your results.

In this article, you’ll discover the A/B testing mistakes many businesses make so you can avoid them, and learn how to use split testing the RIGHT way to discover the hidden strategies that can skyrocket your conversions.

Let’s get started!

1. Split Testing the Wrong Page

One of the biggest problems with A/B testing is testing the wrong pages. It’s important to avoid wasting time, resources, and money with pointless split testing.

How do you know if you should run a split test? If you’re marketing a business, the answer is easy: the best pages to split test are the ones that make a difference to conversions, and result in more leads or more sales.

HubSpot says the best pages to optimize on any site are the most visited pages:

- Home page

- About page

- Contact page

- Blog page

Product pages are also important for eCommerce sites to test, especially the pages for your top-selling products.

In other words, if a page isn’t part of your marketing or sales funnel, there’s little point in testing it (unless you want to add it to those funnels).

If making a change won’t affect the bottom line, move on, and test a page that’ll boost your income instead.

2. Having an Invalid Hypothesis

One of the most important A/B testing mistakes to avoid is not having a valid hypothesis.

What’s an A/B testing hypothesis? An A/B testing hypothesis is a theory about why you’re getting particular results on a web page and how you can improve those results.

Let’s break this down a little more. To form a hypothesis, you need to:

- Step 1: Pay attention to whether people are converting on your site. You’ll get this information from analytics software that tracks and measures what people do on your site. For example, this’ll tell you whether people are clicking on your call to action, signing up for your newsletter or completing a purchase.

- Step 2: Speculate about why certain things are happening. For example, if people arrive on your landing page, but don’t fill out a form to grab your lead magnet, or the page has a high bounce rate, then maybe the call to action is wrong.

- Step 3: Come up with some possible changes that might result in more of the behavior you want on a particular page. For example, in the scenario above, you could test a different version of your call to action.

- Step 4: Work out how you will measure success so you’ll know for sure if a particular change makes a difference to conversions. This is an essential part of the A/B testing hypothesis.

Here’s how you’d put that all together, sticking with our earlier example:

- Observation: We notice that although there’s a lot of traffic to our lead magnet landing page, the conversion rate is low, and people aren’t signing up to get the lead magnet.

- Possible reason: We believe this is because the call to action isn’t clear enough.

- Suggested fix: We think we can fix this by changing the text on the call to action button to make it more active.

- Measurement: We’ll know we’re right if we increase signups by 10% in the month following making the change.

Note that you need all the elements for a valid hypothesis: observing data, speculating about reasons, coming up with a theory for how to fix it, and measuring results after implementing a fix.
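If it helps to keep yourself honest, you can write each hypothesis down in a fixed structure before you launch the test. Here’s a minimal sketch in Python; the `Hypothesis` class and its field names are our own illustration, not part of any testing tool. It simply flags a hypothesis that’s missing one of the four elements.

```python
from dataclasses import dataclass, fields


@dataclass
class Hypothesis:
    """The four elements of an A/B testing hypothesis (illustrative names)."""
    observation: str
    possible_reason: str
    suggested_fix: str
    measurement: str

    def missing_elements(self) -> list[str]:
        """Return the names of any elements that were left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def is_valid(self) -> bool:
        """A hypothesis is only valid when all four elements are filled in."""
        return not self.missing_elements()


# The lead magnet example from above, with every element present.
cta_hypothesis = Hypothesis(
    observation="Landing page gets a lot of traffic but few signups.",
    possible_reason="The call to action isn't clear enough.",
    suggested_fix="Change the button text to make it more active.",
    measurement="Signups increase by 10% in the month after the change.",
)

# The same idea with no stated reason and no success measure.
incomplete = Hypothesis(
    observation="Landing page gets a lot of traffic but few signups.",
    possible_reason="",
    suggested_fix="Change the button text to make it more active.",
    measurement="",
)

print(cta_hypothesis.is_valid())      # True
print(incomplete.missing_elements())  # ['possible_reason', 'measurement']
```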

3. Split Testing Too Many Items

Here’s one of the key A/B testing mistakes lots of people make: trying to split test too many items at once.

It might seem like testing multiple page elements at once saves time, but it doesn’t. What happens is that you’ll never know which change is responsible for the results.

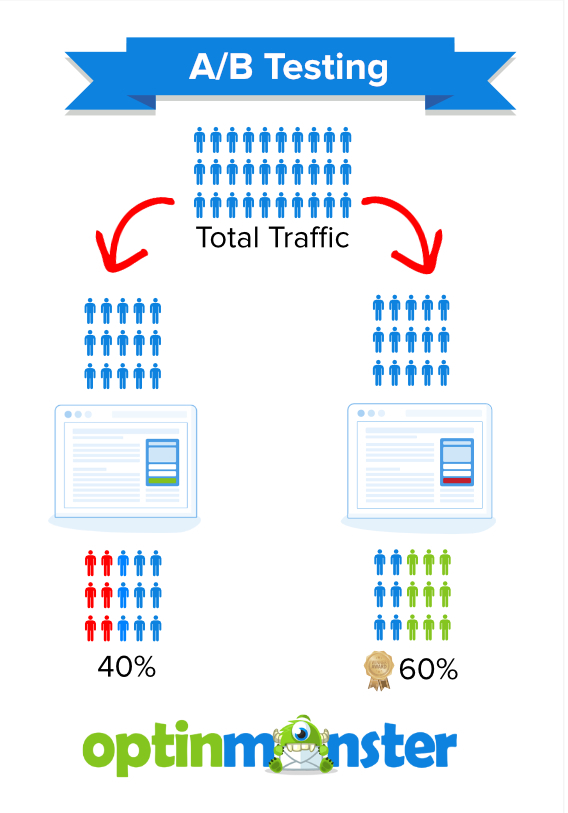

We’ll probably mention it a few times because it’s so important: split testing means changing one item on a page and testing it against another version of the same item, as illustrated here:

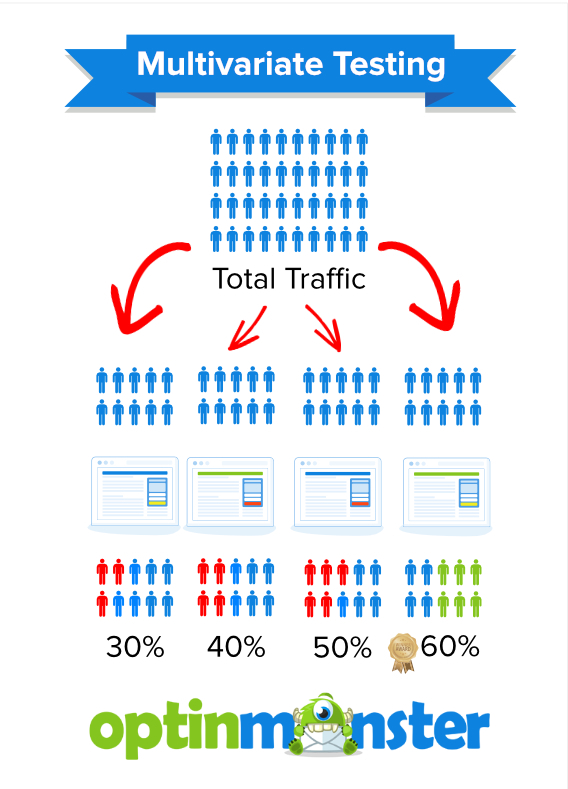

The minute you change more than one item at a time, you need a multivariate test, explained in detail in our comparison of split testing versus multivariate testing.

Multivariate testing can be a great way to test a website redesign where you have to change lots of page elements. But you can end up with a lot of combinations to test, and that takes time you might not want to invest. Multivariate testing also only works well for high traffic sites and pages.
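To see how quickly those combinations add up, here’s a short Python sketch. The page elements, their versions, and the traffic figure are made-up examples. Every version of every element has to be paired with every version of the others, so the totals multiply.

```python
from itertools import product
from math import prod

# Hypothetical page elements and the versions of each you want to try.
elements = {
    "headline": ["original", "benefit-led"],
    "button_text": ["Sign up", "Get my free guide", "Start now"],
    "hero_image": ["photo", "illustration"],
}

# A multivariate test needs one page version per combination of choices.
combinations = list(product(*elements.values()))
assert len(combinations) == prod(len(v) for v in elements.values())

# Assumed traffic figure, split evenly across every combination.
monthly_visitors = 6000
visitors_per_combination = monthly_visitors // len(combinations)

print(len(combinations))          # 12
print(visitors_per_combination)   # 500
```

Changing just three elements already spreads your traffic across 12 page versions, which is why this approach suits high-traffic pages.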

In most cases, a simple split test will get you the most meaningful results.

4. Running Too Many Split Tests at Once

When it comes to A/B testing, keep it simple.

It’s fine to run multiple split tests. For example, you can get meaningful results by testing three different versions of your call to action button. (Running these tests isn’t the same as multivariate testing, because you’re still only changing a single item for each test.)

Most experienced conversion optimizers recommend that you don’t run more than four split tests at a time. One reason is that the more variations you run, the bigger the A/B testing sample size you need. That’s because you have to send more traffic to each version to get reliable results.

This ties into A/B testing statistical significance (or, in everyday terms, making sure the difference you see comes from enough visitors that it’s unlikely to be down to chance), which you can check with tools described in our split testing guide.
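If you’d like a rough feel for the numbers, here’s a sketch of a standard sample size estimate for comparing two conversion rates. It isn’t the method used by any particular tool. The function name, the 5% baseline rate, the 20% lift, and the 80% power setting are all assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift,
                            confidence=0.95, power=0.80):
    """Estimate visitors needed per version to detect a given lift.

    Uses the usual two-sided, two-proportion formula with a normal
    approximation. Treat the result as a ballpark, not a guarantee.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Assumed scenario: 5% baseline conversion rate, hoping for a 20% lift.
per_variant = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.20)

# Every extra version needs its own share of traffic.
for versions in (2, 3, 4):
    print(versions, "versions need about", per_variant * versions, "visitors")
```

Two things stand out when you play with the inputs: the total traffic grows with every version you add, and the smaller the lift you’re hoping to detect, the more visitors each version needs.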

5. Getting the Timing Wrong

With A/B testing, timing is everything, and there are a few classic A/B testing mistakes related to timing…

Comparing Different Time Periods

For example, if you get most of your site traffic on a Wednesday, then it doesn’t make sense to compare split testing results for that day with the results on a low-traffic day.

Even more important, if you’re an eCommerce retailer, you can’t compare split testing results for the holiday boom with the results you get during the January sales slump.

In both cases, you’re not comparing like with like, so you won’t get reliable results. The solution is to run your test for comparable periods, so you can accurately assess whether any change has made a difference.

It’s also important to pay attention to external factors that might affect your split testing results. If you’re marketing locally and the power goes out due to a natural disaster, then you won’t get the traffic or results you expect. And a winter-related offer just won’t have the same impact as summer approaches, says Small Business Sense.

Not Running the Test for Long Enough

You also need to run an A/B test for a certain amount of time to achieve A/B testing statistical significance and an industry-standard 95% confidence rating in the results. That 95% number means that, if your change really made no difference, you’d see a gap this big by chance less than 5% of the time. In other words, you can be reasonably sure the result is real, and you’re safe to make new marketing decisions based on that data.

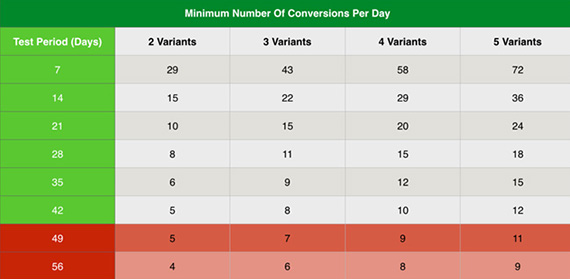

As you’ll see in tip #7, that time varies depending on the number of expected conversions and the number of variants. If you’re running 2 variants and expect 50 conversions, your testing period will be shorter than if you have 4 variants and are looking for 200 conversions.

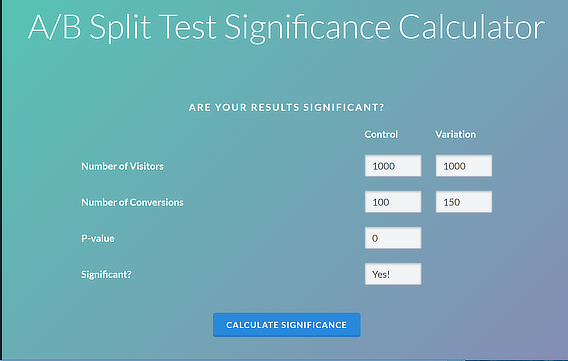

Here’s a calculator from Visual Website Optimizer to help you work out whether you’ve achieved statistical significance for your A/B test. You enter the number of visitors and conversions for your test, and it calculates the statistical significance. It also includes the p-value: the probability of seeing a difference at least this large if your change made no real difference. The lower the p-value, the more you can trust the result.
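If you’re curious what a calculation like this looks like, here’s a minimal sketch of one common approach, a two-sided two-proportion z-test. We’re not claiming this is the exact method the calculator above uses, and the function name and visitor numbers are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(visitors_a, conversions_a,
                           visitors_b, conversions_b):
    """Two-sided p-value for the difference between two conversion rates.

    Assumes at least one conversion and one non-conversion overall,
    otherwise the standard error below would be zero.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: control converts 50 of 1,000, variation 80 of 1,000.
clear_winner = two_proportion_p_value(1000, 50, 1000, 80)

# Hypothetical results: control converts 50 of 1,000, variation 55 of 1,000.
too_close = two_proportion_p_value(1000, 50, 1000, 55)

for label, p in (("clear winner", clear_winner), ("too close", too_close)):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.3f} ({verdict} at 95% confidence)")
```

A p-value below 0.05 corresponds to the 95% confidence level. In the second case, the variation looks slightly better, but the gap is small enough that chance could easily explain it.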

Testing Different Time Delays

Timing can also affect the success of your OptinMonster split tests. One of the A/B testing mistakes we see people make is varying the timing between the campaigns they’re comparing.

If one campaign shows after your visitor’s been on the page for 5 seconds, and another after 20 seconds, that’s not a real split test.

That’s because you’re not comparing similar audiences. Typically, a lot more people will stay on a page for 5 seconds than 20 seconds.

As a result, you’ll see different impressions for each campaign, and the results won’t make sense or be useful to you.
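A quick sketch shows why. The time-on-page values below are invented for ten visitors; the point is only that a longer delay shrinks the audience that ever sees the campaign.

```python
# Hypothetical time on page, in seconds, for ten visitors.
seconds_on_page = [3, 6, 8, 12, 15, 18, 22, 30, 45, 90]


def impressions(delay_seconds, dwell_times):
    """Count visitors who stay long enough to see a campaign with this delay."""
    return sum(1 for t in dwell_times if t >= delay_seconds)


print(impressions(5, seconds_on_page))   # 9
print(impressions(20, seconds_on_page))  # 4
```

The 5-second campaign reaches nine of these visitors, while the 20-second campaign reaches only four, so the two campaigns are being judged on different audiences.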

Remember, for a true split test, you have to change one item on the page, NOT the timing. But if you want to experiment with timing your optins, this article on popups, welcome gates and slide-in campaigns has some suggestions.

6. Working with the Wrong Traffic

We mentioned A/B testing statistical significance earlier. As well as getting the testing period right, you also have to have the right amount of traffic. Basically, you need to test your campaigns with enough people to get meaningful results.

If you have a high-traffic site, you’ll be able to complete split tests quickly, because of the constant flow of visitors to your site.

If you have a low-traffic site, or sporadic visits, you’ll need a bit longer.

It’s also important to split your traffic the right way so you really ARE testing like against like. Some split testing software allows you to manually allocate the traffic you’re using for the test, but it’s easiest to split traffic automatically to avoid the possibility of getting unreliable results from the wrong kind of split.
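For the curious, here’s one common way an automatic split can work, sketched in Python. This is a generic illustration, not a description of how any particular tool (including OptinMonster) divides traffic, and the `assign_variant` function and visitor IDs are hypothetical. Hashing a visitor ID spreads visitors evenly across versions and keeps each visitor on the same version every time they return.

```python
import hashlib
from collections import Counter


def assign_variant(visitor_id: str, variants: list[str]) -> str:
    """Deterministically map a visitor to one of the variants."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


variants = ["control", "variation_1", "variation_2"]

# The same visitor always lands in the same bucket.
assert assign_variant("visitor-42", variants) == assign_variant("visitor-42", variants)

# Across many made-up visitor IDs, the split comes out close to even.
counts = Counter(assign_variant(f"visitor-{i}", variants) for i in range(9000))
print(counts)
```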

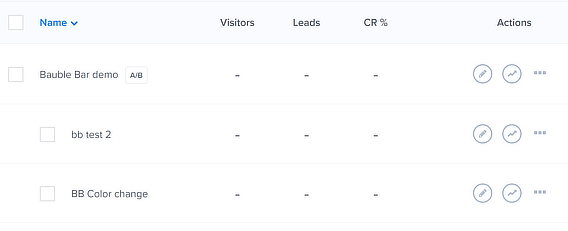

If you’re using OptinMonster for A/B testing, it’s easy to get this right, because OptinMonster automatically divides your traffic according to the number of tests you’re running.

7. Testing Too Early

One common mistake with A/B testing is running the split test too soon.

For example, if you start a new OptinMonster campaign, you should wait a bit before starting a split test. At first, there’s no point in creating a split test because you won’t have data to create a baseline for comparison. You’d be testing against nothing, which is a waste of your time.

Instead, run your new campaign for at least a week and see how it performs before you start tweaking and testing. You can use this chart from Digital Marketer to work out your ideal testing period, based on the expected number of conversions.

8. Changing Parameters Mid-Test

One way to really mess up your A/B testing routine is to change your setup in the middle of the test.

This happens if you:

- Decide to change the amount of web traffic that sees the control or the variation.

- Add or change a variation before the end of the ideal A/B testing period, shown in the chart above.

- Alter your split testing goals.

As Wider Funnel says, sudden changes invalidate your test and skew your results.

If you absolutely need to change something, then start your test again. It’s the only way to get results you can rely on.

9. Measuring Results Inaccurately

Measuring results is as important as testing, yet it’s one of the areas where people make costly A/B testing mistakes. If you don’t measure results properly, you can’t rely on your data, and can’t make data-driven decisions about your marketing.

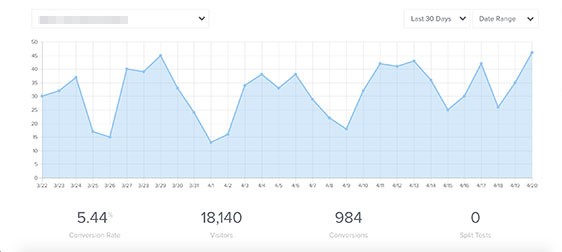

One of the best ways to solve this is to ensure that your A/B testing solution works with Google Analytics.

OptinMonster integrates with Google Analytics, so you can see accurate data on traffic and conversions in your dashboard.

Here’s how you integrate Google Analytics with OptinMonster so you can get actionable insights. You can also set up your own Google Analytics dashboard to collect campaign data with the rest of your web metrics.

10. Using Different Display Rules

One way to really mess up your OptinMonster A/B testing results is making arbitrary changes to the display rules.

OptinMonster has powerful display rules that affect when campaigns show, what timezone and location they show in, who sees them, and more.

But remember that split tests are about changing one element on the page. If you change the display rules so that one optin shows to people in the UK and another to people in the US, that’s not a like-for-like comparison.

If one campaign is a welcome gate, and another is an exit intent campaign, that’s not a like-for-like comparison. If one campaign shows at 9am and the other at 9pm, that’s not … well, you get the idea.

If your campaigns don’t show at the same time to the same type of audience, you won’t get reliable data. Check out our guide to using Display Rules with OptinMonster for help with setting rules for when campaigns should show and who should see them.

11. Running Tests on the Wrong Site

Here’s one of the silliest A/B testing mistakes, and one you’d think most people would catch.

Many people test their marketing campaigns on a development site, which is a great idea. What’s not so great is that sometimes they forget to move their chosen campaigns over to the live site, and then it looks like their split tests aren’t working.

That’s because the only people visiting the development site are web developers, not their customers. Luckily, making the switch is an easy fix, so if you’re not seeing the results you expect, it’s worth checking for this issue.

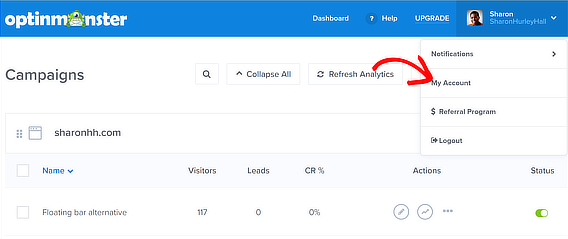

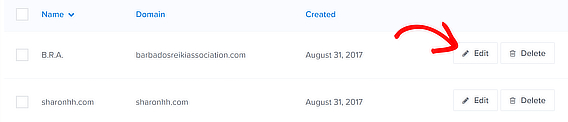

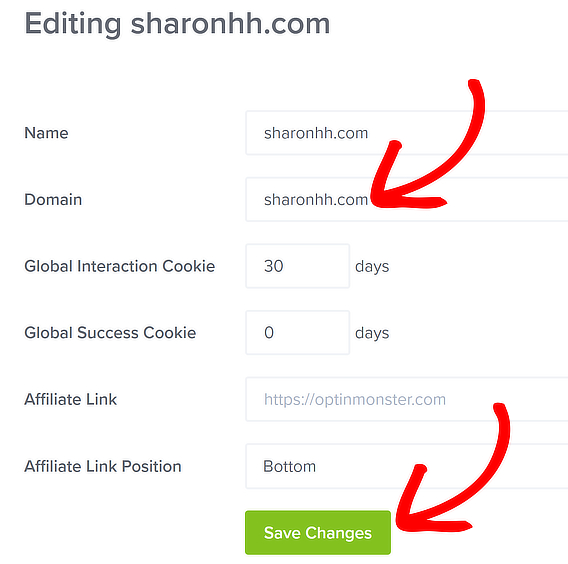

If you’re using OptinMonster, here’s how you fix this issue:

Log in to the OptinMonster dashboard, and click on your account icon to show the drop-down menu. Navigate to My Account.

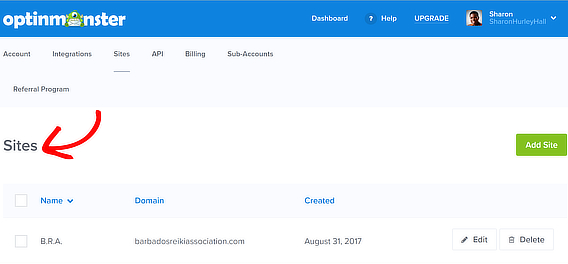

Go to Sites.

Choose the site you want to change and click edit.

Change the URL of the website from the dev site to the live site, then save your changes.

For more guidance, read our doc on how to add, delete, or edit a website in OptinMonster.

12. Giving Up on Split Testing

Some companies continually optimize their sites, which means they are always running tests and increasing conversions. Others, not so much.

One of the A/B testing mistakes you most want to avoid is stopping your test too early (for example, before the one-week minimum we mentioned earlier) or deciding that a test has failed and stopping it.

Giving up on split testing is a terrible error because you won’t get optimization benefits if you do.

First of all, there’s no such thing as a failed split test, because the goal of a test is to gather data. You can learn something from unexpected results that helps you create new tests.

Second, if you’re giving up because you aren’t getting the results you expect, then go with what the data is telling you. Your gut feeling could easily be wrong, and trusting it over the data is a classic case of confirmation bias. You can always run a new test at the end of the testing period to see if different changes will achieve the results you hope for.

Third, don’t decide a test has run for long enough if you haven’t had enough time to get a decent sample size, and achieve statistical significance and a 95% confidence rating. Otherwise, you’ve wasted your time.

13. Blindly Following Split Testing Case Studies

It’s always great to read case studies and learn about the split testing techniques that worked for different companies.

But one A/B testing mistake you must avoid is copying what worked for others.

If that seems strange, hear us out…

It’s fine to use case studies to get ideas for how and what to split test, but be aware that what worked for another business might not work for yours, because your business is unique.

Instead, use A/B testing case studies as a starting point for creating your own A/B testing strategy. That’ll let you see what works best for your own customers, not someone else’s.

Now that you know the dumb A/B testing mistakes that are wasting your time, effort, and money, you can get your split testing off to a good start. As you’ve seen, OptinMonster makes it easy to split test your marketing campaigns so you can get better results.

For more marketing inspiration, check out our expert roundup on creating great landing pages for your marketing campaigns and learn more about creating mobile optin campaigns that win you business.

And don’t forget to follow us on Twitter and Facebook for more in-depth guides.
